As machine learning has become a fundamental workload in today's digital systems, there has been increasing demand for highly efficient hardware to run these workloads. The work done at the Intelligent Digital Systems Lab at Imperial College London aims to address this demand with fpgaConvNet: a toolflow for designing Convolutional Neural Network (CNN) accelerators for FPGAs with state-of-the-art performance and efficiency. FPGA devices have long been considered a highly performant and efficient platform, where a designer can exploit the highly configurable fine-grain building blocks to produce customised hardware. However, many current FPGA designs for AI applications do not exploit these features, opting for a monolithic accelerator approach with very little specialisation towards the particular workload. With the fpgaConvNet toolflow, accelerator designs are customised to a specific CNN workload, mapping each operation in the CNN model to a dedicated hardware block. This leads to a deeply pipelined design with extremely high throughput and ultra-low latency. The toolflow can be used to accelerate a number of applications, such as the ones listed below.

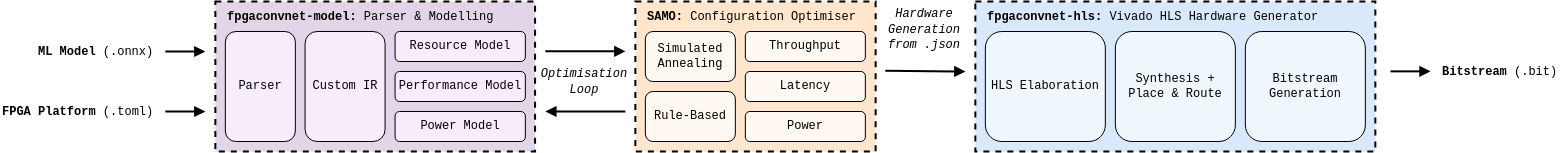
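To give a feel for why mapping every layer to its own hardware block yields high throughput, here is a minimal sketch under a deliberately simplified model: each block processes one layer's workload at a fixed parallelism, and all blocks run concurrently on different images. The `Stage` class, the layer workloads and the parallelism values are hypothetical; this is not fpgaConvNet's actual performance model.

```python
from dataclasses import dataclass


@dataclass
class Stage:
    """One dedicated hardware block in a layer-per-block pipeline (hypothetical model)."""
    name: str
    workload: int     # operations the layer performs per image
    parallelism: int  # operations the block completes per clock cycle

    def cycles(self) -> int:
        # Ceiling division: clock cycles the block spends on one image.
        return -(-self.workload // self.parallelism)


def pipeline_metrics(stages: list[Stage], clock_mhz: float) -> tuple[float, float]:
    """Return (throughput in images/s, latency in ms) for a simple pipeline model."""
    # In steady state all blocks work concurrently on different images,
    # so the slowest block sets the rate at which results leave the pipeline.
    bottleneck = max(s.cycles() for s in stages)
    throughput = clock_mhz * 1e6 / bottleneck
    # Conservative latency bound: an image visits every block in turn.
    # Real streaming designs also overlap blocks within a single image.
    latency_ms = sum(s.cycles() for s in stages) / (clock_mhz * 1e3)
    return throughput, latency_ms


stages = [
    Stage("conv1", workload=100_000, parallelism=50),
    Stage("relu1", workload=20_000, parallelism=10),
    Stage("conv2", workload=400_000, parallelism=100),
]
throughput, latency_ms = pipeline_metrics(stages, clock_mhz=200.0)
print(f"{throughput:.0f} images/s, {latency_ms:.3f} ms")
```

In this toy pipeline `conv2` is the bottleneck, so doubling its parallelism would double throughput at the cost of extra hardware. Navigating that trade-off across every layer is the job of design space exploration.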

The fpgaConvNet framework operates on an intermediate representation (IR) that describes the hardware of a mapped ML model. This component of the framework takes ONNX files and creates the fpgaconvnet-ir used to generate hardware. The IR can also be used to generate high-level performance and resource models of the hardware, which makes it fundamental to rapid design space exploration.

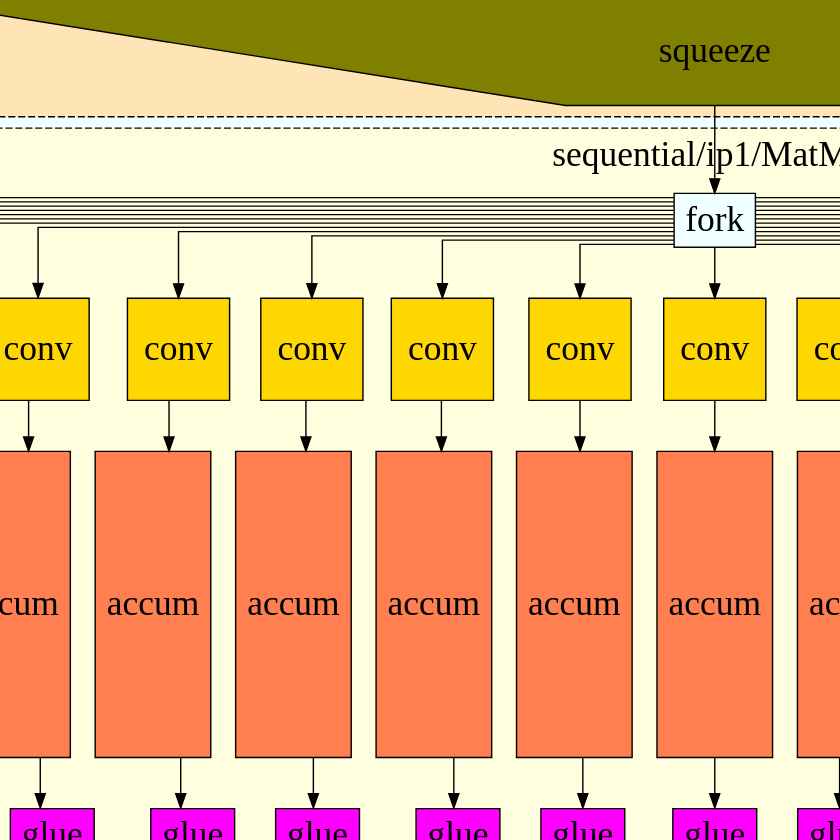
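Because an IR of this kind records each layer's dimensions and hardware parameters, performance and resource estimates reduce to cheap arithmetic rather than a synthesis run. The sketch below illustrates the idea under stated assumptions: the `ConvNode` class and its fields are hypothetical and do not reflect the real fpgaconvnet-ir format, the resource model assumes one DSP slice per parallel multiply-accumulate, and the budget of 220 is the DSP count of the Zynq-7020 device on a ZedBoard.

```python
from dataclasses import dataclass


@dataclass
class ConvNode:
    """Hypothetical IR entry for one layer; not the real fpgaconvnet-ir schema."""
    name: str
    macs: int         # multiply-accumulates per image
    parallelism: int  # MACs the hardware block performs per clock cycle

    def latency_cycles(self) -> int:
        # Performance model: cycles needed to process one image.
        return -(-self.macs // self.parallelism)

    def dsp_estimate(self) -> int:
        # Resource model (simplifying assumption): one DSP slice per parallel MAC.
        return self.parallelism


def fits(nodes: list[ConvNode], dsp_budget: int) -> bool:
    """True if the design's estimated DSP usage fits on the target device."""
    return sum(n.dsp_estimate() for n in nodes) <= dsp_budget


design = [
    ConvNode("conv1", macs=86_400, parallelism=16),
    ConvNode("conv2", macs=153_600, parallelism=64),
]
print([n.latency_cycles() for n in design])  # per-layer cycles
print(fits(design, dsp_budget=220))          # 220 DSP slices on a Zynq-7020
```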

Using a configuration generated by the fpgaconvnet-model tool, the corresponding hardware can be generated by the fpgaconvnet-hls tool. This repository contains a selection of highly parametrised hardware building blocks for common CNN layers (Convolution, Pooling, ReLU, etc.). A dataflow architecture is generated by instantiating and connecting these building blocks together based on the configuration file.
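The instantiate-and-connect step can be pictured with a small generator. This is a minimal sketch, not the fpgaconvnet-hls generator: the configuration schema, the block names (`convolution_block`, `relu_block`, `pooling_block`) and the `data_t` type are all hypothetical. Only `hls::stream`, the FIFO stream type used in Vitis HLS, is a real construct. Each layer becomes one block, and consecutive blocks are joined by a stream.

```python
# Hypothetical configuration: an ordered chain of layers and their parameters.
config = {
    "layers": [
        {"name": "conv1", "type": "Convolution", "params": {"channels": 1, "filters": 8, "kernel": 5}},
        {"name": "relu1", "type": "ReLU", "params": {}},
        {"name": "pool1", "type": "Pooling", "params": {"kernel": 2}},
    ]
}

# Hypothetical mapping from layer type to a parametrised building-block template.
TEMPLATES = {
    "Convolution": "convolution_block",
    "ReLU": "relu_block",
    "Pooling": "pooling_block",
}


def generate_top(config: dict) -> str:
    """Emit HLS-style C++ that instantiates one block per layer and chains them with streams."""
    layers = config["layers"]
    # Streams: the top-level input, one between each pair of blocks, and the output.
    streams = ["in"] + [f"{layer['name']}_out" for layer in layers[:-1]] + ["out"]
    lines = [f"hls::stream<data_t> {s};" for s in streams[1:-1]]
    for layer, src, dst in zip(layers, streams, streams[1:]):
        block = TEMPLATES[layer["type"]]
        # Layer parameters become template arguments of the block.
        args = ", ".join(str(v) for v in layer["params"].values())
        lines.append(f"{block}<{args}>({src}, {dst});  // {layer['name']}")
    return "\n".join(lines)


print(generate_top(config))
```

Keeping the parameters as template arguments mirrors the idea of highly parametrised building blocks: the same block source is reused, specialised per layer at compile time.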

The design space of streaming architectures is immense: even seemingly small networks such as LeNet have 10¹³ possible design points, which would take 89 centuries to evaluate exhaustively. The fpgaConvNet toolflow allows designers to automate the design point selection process, using optimisation solvers tailored to the problem. SAMO is a framework that generalises the optimisation problem across streaming architectures, providing a toolflow for both FINN and HLS4ML. The fpgaconvnet-optimiser project is specialised to the fpgaConvNet architecture, finding even better design points.
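The two figures for LeNet (10¹³ design points and 89 centuries) are consistent with each other. Assuming 365.25-day years, they imply roughly 28 ms per evaluated design point. That per-point cost is back-calculated here and is not a figure reported by the original work.

```python
design_points = 10**13
seconds_per_year = 365.25 * 24 * 3600        # Julian year
total_seconds = 89 * 100 * seconds_per_year   # 89 centuries
per_point_ms = total_seconds / design_points * 1e3
print(f"{per_point_ms:.1f} ms per design point")
```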

A series of tutorials has been created as a learning resource for those wishing to familiarise themselves with the toolflow. These can be found in the fpgaconvnet-tutorial repository on GitHub, which contains the following tutorials:

End-to-End Example: covers the steps of parsing an ONNX graph and interfacing with its performance and resource models. It then shows how to optimise this hardware description and generate an IP from it.

Hardware Tutorial: gives the necessary steps to integrate the IP into an example system, which is then used to create a bitstream. Example software is then given to collect performance results for the generated hardware.

YOLOv5 Acceleration: has instructions on running a YOLOv5 accelerator built by the fpgaConvNet tool using the PYNQ framework. This design won the AMD open hardware competition in 2023.

To help understand the architecture generated by fpgaConvNet and the optimisation steps taken to reach it, an interactive visualisation of the design space exploration is displayed below. Moving the slider shows the state of the design at each optimisation step. The performance graph shows how throughput and latency change across the optimiser's steps, with the currently visualised point highlighted. The resources and block diagram for the chosen point are also shown. This visualisation is created for the LeNet model targeting a ZedBoard.

The results presented in the table below are from our submission to the MLPerf Tiny benchmark, round v1.1. The submission demonstrates the versatility of the fpgaConvNet toolflow by targeting a range of low-cost FPGAs whilst achieving ultra-low latency across these devices. This performance comes from exploiting the reconfigurability of FPGAs, which allows the tool to create accelerator designs tailored to each specific task and device. fpgaConvNet showcases the potential of FPGA devices for TinyML applications, achieving performance similar to that of ASICs whilst retaining the programmability of MCUs.

| Device | Task | Latency (ms) | LUT | DSP | BRAM | Freq. (MHz) |
|---|---|---|---|---|---|---|
| ZC706 | Image Classification | 0.15 | 108K | 564 | 281 | 187 |
| ZC706 | Visual Wake Word | 0.72 | 133K | 564 | 366 | 200 |
| ZedBoard | Image Classification | 0.41 | 47K | 211 | 93 | 143 |
| ZedBoard | Visual Wake Word | 9.49 | 34K | 189 | 123 | 111 |
| ZedBoard | Keyword Spotting | 0.32 | 37K | 188 | 97 | 143 |
| ZyBo | Image Classification | 3.15 | 16K | 78 | 36 | 125 |
| ZyBo | Keyword Spotting | 2.15 | 15K | 60 | 16 | 125 |
| Cora-Z7 | Keyword Spotting | 4.21 | 13K | 55 | 28 | 143 |

Instructions on how to use the bitstreams can be found in the MLPerf-Tiny repo.

Since the initial conception of the fpgaConvNet toolflow, there have been many research projects which have contributed to and utilised the framework. The core development of the toolflow has led to innovations in Streaming Architecture design as well as Design Space Exploration. This in turn has enabled novel contributions in several application domains, broadening the scope of the toolflow. The growing list of research outcomes is given below.

Stylianos I. Venieris and Christos-Savvas Bouganis

The original paper detailing the design principles of fpgaConvNet, such as the application of Synchronous Dataflow (SDF) performance modelling for streaming architectures, and high-level resource estimation. This work also explores the use of bitstream reconfiguration for high-throughput applications.
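The throughput benefit of bitstream reconfiguration depends on amortising the reconfiguration time over a batch of inputs. The following minimal sketch uses a simplified model: the FPGA is loaded with each partition's bitstream in turn, and every partition processes the whole batch before the next reconfiguration. The partition times and the 100 ms reconfiguration time are hypothetical.

```python
def reconfig_throughput(partition_ms: list[float], reconfig_ms: float, batch: int) -> float:
    """Images per second for a CNN split across several bitstreams.

    Simplified model (assumption): each partition's bitstream is loaded in
    turn, and every partition processes the whole batch before the next
    reconfiguration.
    """
    total_ms = sum(reconfig_ms + batch * t for t in partition_ms)
    return batch / (total_ms / 1e3)


parts = [0.5, 0.8, 0.3]  # hypothetical per-image time of each partition (ms)
for batch in (1, 64, 1024):
    print(batch, round(reconfig_throughput(parts, reconfig_ms=100.0, batch=batch)))
```

As the batch grows, the fixed reconfiguration cost is amortised and throughput approaches the limit set by the partitions alone (1000 / 1.6 = 625 images/s here). This is why the approach suits throughput-oriented rather than latency-critical workloads.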

Stylianos I. Venieris and Christos-Savvas Bouganis

This work explores methods of targeting low latency with fpgaConvNet by constraining the design to a single bitstream and making use of runtime reconfiguration of the weight parameters.

Stylianos I. Venieris and Christos-Savvas Bouganis

The scope of fpgaConvNet is expanded to support more irregular network styles such as GoogLeNet and ResNet, by including element-wise and concatenation layers in the design. This work addresses the design space exploration challenges of including multiple branches in the model.

Alexander Montgomerie-Corcoran and Christos-Savvas Bouganis

This work explores the power consumption aspect of fpgaConvNet, producing high-level models of how design choices affect the power consumption of the accelerator.

Alexander Montgomerie-Corcoran and Christos-Savvas Bouganis

In this paper, a novel coding scheme is introduced which exploits the properties of feature maps to reduce the activity along off-chip memory data busses, leading to lower system power consumption.
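The paper's coding scheme is tailored to feature-map statistics and is not reproduced here. As a stand-in, the sketch below uses classic bus-invert coding, a well-known generic technique, to illustrate the underlying idea: re-encode data so that fewer bus lines switch between consecutive transfers, since dynamic power scales with switching activity. This variant also counts the toggle of the extra invert-flag line when choosing between the two encodings.

```python
def toggles(a: int, b: int) -> int:
    """Number of bus lines that switch between two consecutive bus values."""
    return bin(a ^ b).count("1")


def bus_invert_encode(words: list[int], width: int) -> list[tuple[int, int]]:
    """Bus-invert coding: send each word as-is or inverted, whichever switches fewer lines.

    Returns (bus_value, invert_flag) pairs; the flag travels on one extra line.
    """
    mask = (1 << width) - 1
    prev_bus, prev_flag = 0, 0
    encoded = []
    for w in words:
        inverted = ~w & mask
        # Switching cost of each option, including a toggle of the flag line.
        cost_plain = toggles(prev_bus, w) + (prev_flag != 0)
        cost_inv = toggles(prev_bus, inverted) + (prev_flag != 1)
        bus, flag = (inverted, 1) if cost_inv < cost_plain else (w, 0)
        encoded.append((bus, flag))
        prev_bus, prev_flag = bus, flag
    return encoded


def decode(pairs: list[tuple[int, int]], width: int) -> list[int]:
    """Receiver side: re-invert any word sent with the flag set."""
    mask = (1 << width) - 1
    return [bus ^ mask if flag else bus for bus, flag in pairs]


def activity(pairs: list[tuple[int, int]]) -> int:
    """Total line toggles (data lines plus flag line), starting from an all-zero bus."""
    prev_bus, prev_flag, total = 0, 0, 0
    for bus, flag in pairs:
        total += toggles(prev_bus, bus) + (prev_flag != flag)
        prev_bus, prev_flag = bus, flag
    return total


words = [0x00, 0xFF, 0x00, 0xFF]
raw = [(w, 0) for w in words]  # unencoded bus: flag line never used
enc = bus_invert_encode(words, width=8)
print(activity(raw), activity(enc))
```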

Zhewen Yu and Christos-Savvas Bouganis

This work looks into methods of compressing ML models by using Singular Value Decomposition (SVD) to reduce the number of weight parameters required. fpgaConvNet was used to show how this method benefits ML accelerators.
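The basic mechanism of SVD-based compression is to replace a weight matrix W by two thin factors A and B from a truncated SVD. This is a generic sketch, not the specific method of the paper. The matrix below is synthetic and built to be approximately low-rank, which is an assumption: real trained layers are not guaranteed to be this compressible.

```python
import numpy as np

rng = np.random.default_rng(0)
# Hypothetical 256x512 weight matrix with approximately low-rank structure,
# standing in for a trained layer.
W = rng.standard_normal((256, 16)) @ rng.standard_normal((16, 512))
W += 0.01 * rng.standard_normal(W.shape)


def low_rank_factors(W: np.ndarray, rank: int) -> tuple[np.ndarray, np.ndarray]:
    """Truncated SVD: W ~= A @ B with A of shape (m, rank) and B of shape (rank, n)."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    A = U[:, :rank] * S[:rank]  # fold the singular values into the left factor
    B = Vt[:rank, :]
    return A, B


A, B = low_rank_factors(W, rank=32)
rel_err = np.linalg.norm(W - A @ B) / np.linalg.norm(W)
print(f"params: {W.size} -> {A.size + B.size}, relative error {rel_err:.4f}")
```

The layer then runs as two smaller layers (first B, then A), so 24,576 weights replace 131,072 in this example, and the number of multiply-accumulates per input vector falls by the same ratio.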

Alexander Montgomerie-Corcoran*, Zhewen Yu* and Christos-Savvas Bouganis

This work builds on the capabilities of fpgaConvNet's design space exploration, by proposing two optimisers (simulated annealing and rule-based), as well as expanding the scope to other FPGA-based ML accelerator frameworks, namely FINN and HLS4ML.
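Simulated annealing is one of the two optimisers named above. The following textbook version runs over a toy design space and is not the optimiser from the paper or from SAMO. The layer workloads, the allowed parallelism values and the one-DSP-per-unit-of-parallelism resource model are hypothetical. The search minimises the bottleneck cycles per image under a DSP budget.

```python
import math
import random

# Hypothetical toy design space: one parallelism factor per layer.
WORKLOADS = [86_400, 153_600, 40_000]    # MACs per image for each layer
CHOICES = [1, 2, 4, 8, 16, 32, 64, 128]  # allowed parallelism per layer
DSP_BUDGET = 220                         # assumption: one DSP per unit of parallelism


def cost(design: list[int]) -> float:
    """Bottleneck cycles per image (lower is better); infeasible designs cost infinity."""
    if sum(design) > DSP_BUDGET:
        return math.inf
    return max(-(-w // p) for w, p in zip(WORKLOADS, design))


def anneal(steps: int = 5000, t0: float = 1000.0, seed: int = 0) -> tuple[list[int], float]:
    rng = random.Random(seed)
    current = [1] * len(WORKLOADS)  # minimal design, always feasible
    best = current[:]
    for step in range(steps):
        temp = t0 * (1 - step / steps) + 1e-9  # linear cooling schedule
        neighbour = current[:]
        neighbour[rng.randrange(len(neighbour))] = rng.choice(CHOICES)  # perturb one layer
        delta = cost(neighbour) - cost(current)
        # Accept improvements always; accept worse moves with probability exp(-delta / temp).
        if delta <= 0 or rng.random() < math.exp(-delta / temp):
            current = neighbour
            if cost(current) < cost(best):
                best = current[:]
    return best, cost(best)


best, cycles = anneal()
print(best, cycles)
```

Over-budget moves have infinite cost, so their acceptance probability is zero and the search never leaves the feasible region. Accepting occasional worse moves while the temperature is high lets it escape local optima before cooling.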

Petros Toupas*, Alexander Montgomerie-Corcoran*, Christos-Savvas Bouganis, Dimitrios Tzovaras

A new architecture based on the design principles of fpgaConvNet is developed in this work, with the added benefit of runtime parameter reconfiguration. This avoids the need for full bitstream reconfiguration, making it suitable for low-latency applications such as Human Action Recognition (HAR).

Benjamin Biggs, Christos-Savvas Bouganis, George A. Constantinides

ATHEENA looks into using early exit networks with fpgaConvNet, and the challenges associated with handling early exit branches.
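Early-exit networks attach an intermediate classifier partway through the model. The following generic sketch shows the inference logic using a common exit criterion: leave early if the top softmax probability reaches a threshold. The 0.9 threshold and the function names are hypothetical, and this is not ATHEENA's implementation.

```python
import math
from typing import Callable, Sequence


def softmax(logits: Sequence[float]) -> list[float]:
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_predict(
    exit_logits: Sequence[float],
    run_rest_of_network: Callable[[], Sequence[float]],
    threshold: float = 0.9,
) -> tuple[int, bool]:
    """Return (predicted class, exited_early).

    The remaining layers are only evaluated when the early-exit classifier's
    top softmax probability falls below `threshold`.
    """
    probs = softmax(exit_logits)
    confidence = max(probs)
    if confidence >= threshold:
        return probs.index(confidence), True
    final = softmax(run_rest_of_network())
    return final.index(max(final)), False


print(early_exit_predict([5.0, 0.0, 0.0], lambda: [0.0, 1.0, 0.0]))  # confident: exits early
print(early_exit_predict([1.0, 0.9, 0.8], lambda: [0.0, 3.0, 0.0]))  # unsure: runs the full network
```

In a streaming accelerator, samples that exit early never reach the later layers, so those layers receive a data-dependent fraction of the input rate. Handling this variable flow is one of the difficulties that early-exit branches introduce in hardware.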

Petros Toupas, Christos-Savvas Bouganis, Dimitrios Tzovaras

This study bridges the gap between recent developments in computer vision on videos and their deployment and applications on FPGAs by optimising and mapping a state-of-the-art 3D-CNN model (X3D) for human action recognition (HAR).

Alexander Montgomerie-Corcoran*, Zhewen Yu*, Jianyi Cheng, Christos-Savvas Bouganis

This work addresses the challenges associated with exploiting post-activation sparsity for performance gains in streaming CNN accelerators.
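Post-activation sparsity arises because ReLU maps every negative value to zero, and a zero activation contributes nothing to a dot product. The following minimal sketch skips those multiplies and counts how many are actually performed. The activation and weight values are hypothetical, and this is not the accelerator design from the paper.

```python
def relu(xs: list[float]) -> list[float]:
    return [max(0.0, x) for x in xs]


def sparse_dot(activations: list[float], weights: list[float]) -> tuple[float, int]:
    """Dot product that skips zero activations; also returns the multiplies performed."""
    acc, mults = 0.0, 0
    for a, w in zip(activations, weights):
        if a == 0.0:
            continue  # a zero activation contributes nothing, so the multiply is skipped
        acc += a * w
        mults += 1
    return acc, mults


pre_activation = [-1.0, 2.0, -0.5, 3.0, 0.0, -2.0, 1.0, -4.0]  # hypothetical values
weights = [0.5, 1.0, 2.0, -1.0, 3.0, 0.25, 2.0, 1.5]
acts = relu(pre_activation)
result, mults = sparse_dot(acts, weights)
dense = sum(a * w for a, w in zip(acts, weights))
print(result, dense, f"{mults}/{len(acts)} multiplies")
```

Here five of the eight multiplies are skipped, but the count depends on the input data. A pipeline whose blocks are sized for a fixed rate cannot automatically turn that variable saving into higher throughput, which is one reason exploiting sparsity in a streaming design is challenging.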

Petros Toupas, Christos-Savvas Bouganis, Dimitrios Tzovaras

This work optimises and maps state-of-the-art 3D-CNN models for human action recognition (HAR), targeting throughput-oriented designs.